ALEXA: That could be great, if only…


Well, again breaking with tradition, this article will be in English. In short, it’s about Amazon’s semi-awesome concept of doing voice processing in the cloud and triggering different actions when certain keywords are recognized. I came across it at Amazon’s Echo workshop in Munich on 3/23/17 and ordered an Echo Dot right away to play with it.

1. Preface

A word on technology and to technology-driven companies in general: Dear product owners, for the love of god, please have your company and the techs in the basement create technology that makes life easier. Rant commencing…

- I do not want to tell the intelligent microphone my life story before a light turns on.

- „Alexa, lights on“ = good. „Alexa, please turn the lights on“ = bad.

- „Oven, 180 degrees, hot air“ = good. „Alexa, please set the oven to 180 degrees and switch on the hot air program“ = very bad.

The extra words in the „bad“ variants make for way too long an interaction. Amazon, your competition is the human pressing a button in about 0.2 seconds. Be better than that.

- Why do you make it so hard for developers to actually build great stuff?! Smart home appliances that only talk to closed-off cloud systems, the Alexa system grabbing voice input for certain control and smart home patterns before I can process it in my custom skill, …

- And lastly, please stop patronizing your customers. You never know exactly or completely what your customer wants. Let the early adopters play with your product. It’s beneficial for both of us: we get our dream solution, you get publicity and one or two ideas for free!

By the way, I’m not the first to address this, see his slide #10 from April 2016, nearly one year ago. He has EXACTLY the same points.

Now, let’s see how far we get with the Alexa product. And yes, I will not anthropomorphize it – it’s not a she… yet.

2. System Architecture

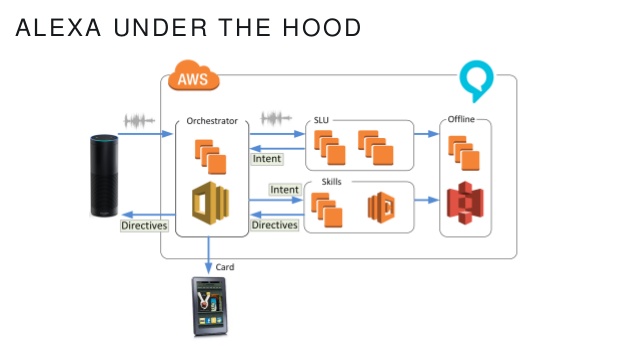

Not particularly complicated, the system architecture.

- A great microphone array is always on and listens for a specific wake phrase, e.g. „Alexa“.

- After that, all input is streamed to the cloud service for about 8 seconds.

- Audio data is processed by a „spoken language understanding“ thingy for human voice (probably a large ANN bought from Poland), also known as speech-to-text or voice recognition. Here the input is also separated into an „intent“ (= program to call) and „slot variables“ (arguments to said program).

- Now the intent module (of a particular „skill“) is called, performs some action and generates a spoken response that is sent back to the Echo. I’m not certain if the Echo does the voice synthesis on its own hardware, or if it just plays back an audio stream.

Ideally, the Echo device would not need LAN access at all and would just have the cloud computers calculate voice responses and other actions.

Also note that for now, they say no Echo device is allowed to send commands to the local network. I don’t know if that’s still true: for smart home discovery, some people say it does a local SSDP broadcast to search for devices.
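To make the intent/slot idea concrete, here is a minimal sketch in Python. It is not how Amazon implements anything; the handler names, intent names and reply texts are made up for illustration. The point is only that an intent selects the program and the slots become its arguments.

```python
from typing import Callable, Dict

# Hypothetical handlers; names and reply texts are invented for this sketch.
def lights_off(room: str) -> str:
    return f"Turning off the lights in the {room}."

def lights_color(color: str, lightsname: str) -> str:
    return f"Setting {lightsname} to {color}."

# An "intent" names the program to call; the "slots" are its arguments.
HANDLERS: Dict[str, Callable[..., str]] = {
    "LightsIntent": lights_off,
    "LightsColorIntent": lights_color,
}

def dispatch(intent: str, slots: Dict[str, str]) -> str:
    """Look up the handler for an intent and pass the slots as keyword arguments."""
    handler = HANDLERS.get(intent)
    if handler is None:
        return "Sorry, I don't know that command."
    return handler(**slots)

print(dispatch("LightsIntent", {"room": "kitchen"}))
```

The returned string stands in for the spoken response that gets sent back to the Echo.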

The awesome parts are that:

- voice-to-text processing seems to work extremely well, with both German and English,

- even with music playing from the Echo speaker, the system will usually recognize my input,

- it seems to cope with different speakers really well.

The less-awesome parts are that:

- Amazon has put many hooks in place which grab user input and route it to Amazon systems (e.g. for music playback, weather, traffic information, …),

- customizing reactions to voice commands is cumbersome and limited at the moment („custom skills“),

- it’s a very cloud driven solution.

While I accept that for voice processing, there are so many use cases that cannot be generalized for the public and are only valid for single installations. In order for this system to grow beyond the toy factor, Amazon will need to open up to the development community much more. See the rant above, and also the bad customer reviews for the majority of the skills…

3. Skills and Skills…

In order to make use of the voice command system, you’ll need to create your own „skill“ (bunch of callable programs) with one or more „intents“ (programs to call). However, there are different skill types:

3.1 Smart Home Skill

- automatically reacts to any phrases typically met in such a context („turn on“, „turn off“, „dim up“, „dim down“, …)

- has predefined intents („on“, „off“, „dim to“, „dim up“, „dim down“, „set temperature“, …) – no other commands, such as color, program or similar

- mandatory OAuth account linking (you can use Amazon OAuth „login with amazon“)

- can only directly call AWS Lambda functions, not your own HTTPS server. However, the AWS Lambda function can in turn call a script on your dyndns’d home server, and you do the translation into local REST requests there.

- no custom responses, only „OK“ or „error with skill“

- Afaik, no back and forth supported („Alexa, are all lamps off?“)

- Afaik, only one lamp per input (no „turn on bathroom and kitchen“)
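The Lambda-to-home-server hop mentioned in the list above could look roughly like the following sketch. It only builds the URL for the forwarded GET request; the hostname, script path and parameter names are assumptions, and the actual HTTP call plus the Smart Home directive parsing are left out.

```python
from typing import Optional
from urllib.parse import urlencode

# Assumption: a hypothetical dyndns hostname and script path on the home server.
HOME_SERVER = "https://myhome.example.org/alexa.php"

def build_forward_url(device: str, command: str, value: Optional[int] = None) -> str:
    """Encode the device name and command as query parameters for a GET request."""
    params = {"device": device, "command": command}
    if value is not None:
        # Only commands such as a percentage setting carry a value.
        params["value"] = str(value)
    return f"{HOME_SERVER}?{urlencode(params)}"

print(build_forward_url("living room", "on"))
print(build_forward_url("living room", "setPercent", 40))
```

The home server script then translates these parameters into whatever local REST request the lamp or heating gateway expects.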

Unfortunately, it’s not possible to define rooms or other criteria (e.g. building story) for the voice engine to match to. For now, it only accepts a name.

I don’t know what’s best practice. Given a lamp in a room, I named it e.g. „living room“, so I can go „Alexa, living room off“. If I had two lamps in the living room, I wouldn’t know what to do, since afaik I cannot say „turn off lamp one in the living room“. They say that a device’s additional information [1-4] is not being used by the voice processor.

3.2 Custom Skill

In order to overcome these difficulties, I then tried the Custom Skill variant. And boy, did this look great at first.

- Custom HTTPS script callable (JSON data in PHP’s $HTTP_RAW_POST_DATA; on PHP 7 and later, read php://input instead, since that variable was removed)

- Upload self-signed certificate

- Have the Echo speak any response you want, you define it in a text response.

- No AWS lambda required

- custom phrases („utterances“) and custom intents and slots, you automatically get the closest match from a list of choices

Being more intelligent than the system, I chose „lights“ as the activation word of my skill and was looking forward to building the best voice command structure ever. So, I fed it all the phrases I could think of:

- LightsIntent off in {room}

- LightsProgram program {number} for {lightsname}

- LightsColorIntent color to {color} for {lightsname}

- …you get the idea…

Ideally, I’d activate my custom skill with „Alexa, lights“ and then one of the custom phrases with one or more slots would get sent to my server, which in turn would issue the appropriate REST commands to the appliances.
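As an illustration of how such phrases with slots map to an intent plus arguments, here is a small Python sketch that turns the sample phrases into regular expressions. This is my own toy matcher, not Alexa's matching logic (which is statistical and picks the closest match); the function names are hypothetical.

```python
import re
from typing import Dict, Optional, Tuple

# Sample phrases in the "IntentName phrase with {slots}" form used above.
SAMPLES = [
    "LightsIntent off in {room}",
    "LightsProgram program {number} for {lightsname}",
    "LightsColorIntent color to {color} for {lightsname}",
]

def compile_sample(sample: str):
    """Split off the intent name and turn the phrase into a regex with named groups."""
    intent, phrase = sample.split(" ", 1)
    # re.split with a capturing group: odd-indexed parts are the slot names.
    parts = re.split(r"\{(\w+)\}", phrase)
    pattern = "".join(
        f"(?P<{part}>.+?)" if i % 2 else re.escape(part)
        for i, part in enumerate(parts)
    )
    return intent, re.compile(f"^{pattern}$", re.IGNORECASE)

COMPILED = [compile_sample(s) for s in SAMPLES]

def match_utterance(utterance: str) -> Optional[Tuple[str, Dict[str, str]]]:
    """Return (intent, slots) for the first phrase that matches exactly, else None."""
    for intent, pattern in COMPILED:
        m = pattern.match(utterance.strip())
        if m:
            return intent, m.groupdict()
    return None

print(match_utterance("color to red for desk lamp"))
```

Anything after „Alexa, lights“ would be passed to `match_utterance`, and the resulting intent and slots would decide which REST command goes out.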

Unfortunately, this only worked in the simulator in the Alexa development console.

As I said before, everything remotely sounding like a Smart Home related command, even the unsupported color commands, is not sent to your custom skill. Instead, Echo will tell you that there are no Smart Home devices or that this command is not available for the specific device.

3.3 The best of both worlds

Alright, so, in order to still have a usable system, I created both a Smart Home Skill and a Custom Skill.

- The Smart Home Skill would control some lamps I have and the heating. I’d put everything with regard to discovery into the AWS Lambda function and forward the extracted device name and command arguments („on“, „off“, „setPercent“) via REST to my own server, ignoring certificate verification.

- The Custom Skill would control the sophisticated lamps (offering light programs and colors via a local REST interface). It is activated by „Scheinwerfer“, German for floodlight, one of the words the Smart Home engine does not react to.

Since it can talk to my server directly, only the processing changes on my server: I don’t get a GET request, but rather a POST with a lot of JSON data in the POST body. After doing a JSON-decode, I can issue the appropriate command to the lamp’s gateway.
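The server side of the Custom Skill could be sketched like this in Python (my own script is PHP, so treat this as an illustration only). The request and response field names follow the custom skill JSON interface as far as I know it; the sample body is trimmed and made up, and request verification as well as the actual call to the lamp’s gateway are omitted.

```python
import json
from typing import Dict

def handle_post(body: str) -> str:
    """Decode a custom skill request body and return a JSON response string."""
    event = json.loads(body)
    request = event.get("request", {})
    if request.get("type") == "IntentRequest":
        intent = request["intent"]
        # Each slot is an object with a "name" and, if recognized, a "value".
        slots: Dict[str, str] = {
            name: slot["value"]
            for name, slot in (intent.get("slots") or {}).items()
            if "value" in slot
        }
        # A real script would issue the command to the lamp's gateway here.
        args = ", ".join(f"{k} {v}" for k, v in sorted(slots.items()))
        text = f"Okay, {intent['name']} with {args}."
    else:
        text = "Scheinwerfer is ready."
    return json.dumps({
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            "shouldEndSession": True,
        },
    })

# Trimmed, made-up request body; a real one also carries session data.
sample = json.dumps({
    "version": "1.0",
    "request": {
        "type": "IntentRequest",
        "intent": {
            "name": "LightsColorIntent",
            "slots": {
                "color": {"name": "color", "value": "red"},
                "lightsname": {"name": "lightsname", "value": "desk lamp"},
            },
        },
    },
})
print(handle_post(sample))
```

The `text` field is what the Echo speaks, which is exactly the freedom the Smart Home Skill does not give you.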

Even though Amazon probably doesn’t want this, here is what I’d wish for:

- Disable their smart home commands – not possible at the moment afaik

- Create a custom Skill that can have a reserved activation word („lights“) – not possible at the moment afaik

- Put my own Intents and Type Fields there – works

- Define an AWS lambda suite to process my requests – works

- Put my own Intent handlers there – works

- Give an Intent handler the option of defining a LAN-local URL („http://192.168.20.10/turnon“) to call – not possible at the moment afaik

  - Echo would relay the request in my LAN,

  - and return the response to my AWS lambda function

  - The Echo could relay discovery, control and query operations

- Enable an intent handler to define a response text – not possible at the moment for Smart Home skills

- Enable more attributes for Smart Home devices

  - room

  - maintenance group (DE: „Gewerk“)

With this, possibly more smart home owners could use their own systems and gateways from so many different vendors with the Alexa system without going through the FHEM hassle.

4. Some caveats I’ve noticed so far…

- I bought the latest album, „Under Stars“ by dear Amy, from Amazon into my account; however, the system would only play excerpts when told to play the „latest album by Amy Macdonald“. Telling it the album name directly worked.

- Mixed language input – essential for music control – is at a very early stage for now. Even more so if you have funny spelling, such as „(r)Evolution“ by HammerFall.

- Nearly everything sounding like a smart home command, i.e. having the words „lamp“, „light“, „LED“, etc., in it, will go to the smart home processing engine. And since that is very limited at the moment, e.g. no color commands, no program numbers, I cannot build the awesome smart home voice interface I was hoping for.

- In the smart home ecosystem, you cannot define rooms; see above.
